USING MACHINE LEARNING TO PREDICT PRICE DISPERSION

AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

Abstract. Theory suggests two sources of price dispersion amongst homogenous goods: market

frictions or product heterogeneity. We collected posted-price listings for Kindle Fire tablets from

eBay to determine if listing heterogeneity can explain the high degree of dispersion we observe. Using

a basic set of controls and empirical techniques in line with the previous literature, we can explain

only 13% of variation in posted prices, which is also in keeping with previous research. However,

we can explain 42% of the dispersion by applying machine learning to a richer set of variables,

which we extract from raw downloaded HTML pages. We interpret this number as a bound on the

role of market frictions in driving price dispersion. Variables describing the amount of information

in the listings, the style of the listings, and the content of the listings’ text are effective price

predictors independently of one another. Our analysis suggests that the content of the listings’ text

plays a primal role in generating the predictions of the machine learning estimator. We repeat our

analysis on a cross-section of products across a variety of categories on eBay, including household

products, sporting goods, and other consumer electronics, and we find a comparable degree of price

predictability across all of the products.

1. Introduction

The “Law of One Price” (LOP) is a prediction from economic theory that all exchanges of

homogeneous goods in a thick, frictionless market ought to take place at a single price. However,

the LOP fails to describe reality in many settings, a fact that was pithily summarized by Hal

Varian, who wrote “the law of one price is no law at all” (Varian 1980 [51]).

In the infancy of online shopping it was thought that the LOP might be more realistic in on-

line markets due to heavy participation by buyers and sellers and database technology that would

seem to make product search as frictionless as possible. To the contrary, however, non-trivial price

dispersion online quickly became a well documented fact, even for products that appear homoge-

neous, such as new books (e.g., Bailey 1998 [6], Brynjolfsson and Smith 2000 [16]). In a model with

rational buyers and sellers, price variation for seemingly homogeneous products can arise from two

sources. First, it could be that units of a given product are actually heterogeneous in subtle ways

that are apparent to consumers, but difficult for researchers to detect in the data. For example,

sellers may bundle complementary objects like accessories or a warranty as means for differentiat-

ing an otherwise homogenous product. Second, market frictions (e.g., search costs or informational

asymmetries) combined with strategic competition between sellers could endogenously generate

Key words and phrases. Online Markets, Price Dispersion, Machine Learning

JEL subject classification: D4, D43, L86

.

1

2 AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

equilibrium price dispersion for homogeneous products.

1

Since the various search friction models

imply pricing noise which is plausibly orthogonal to observable characteristics (e.g., mixed pricing

strategies, see Baye, Morgan and Scholten 2006 [11]), any predictive power to be found places a

bound on the role that search frictions could play.

If observable product heterogeneity can explain price dispersion, then in principle it should be

possible to identify features of the product listings that predict the listings’ prices. Our goal is to

maximize the fraction of the price variability that can be explained by product heterogeneity (and

hence need not be explained by market frictions) using richer data in combination with machine

learning methodologies. We analyze a unique and very detailed dataset consisting of posted-price

listings for new Amazon Kindle Fires culled from the eBay marketplace. The dataset includes the

entire HTML code for each listing, so we can observe essentially everything the buyer sees with the

exception of the visual content of the non-stock photos. This provides a rich set of data on which

to estimate our preferred machine learning model, a random forest (Breiman 2001 [15]). These two

contributions, the richer dataset and the use of machine learning, are meant to solve two potential

problems—omitted variable bias and functional form mis-specification—which may have limited

price prediction power in previous empirical studies. Although our empirical model is predictive in

nature, for our purposes we need not identify a causal demand system in order to parse between

product heterogeneity and market frictions as sources of price dispersion.

There are several reasons that we are interested in investigating the magnitude of search fric-

tions on eBay. Market frictions cause waste in terms of user time and effort spent searching, which

could dissuade potential buyers from using the platform. Second, market frictions generated by

strategic competition between sellers result in rents for the firms. Since controlling the balance

of rents received by buyers and sellers is an important strategic decision for competing platforms,

understanding these frictions is a crucial aspect of platform design (Rochet and Tirole 2003 [42]).

In either case, alleviating (or at least controlling) these market frictions is important for platform

service providers. Peer-to-peer platform markets are becoming more prevalent in the online econ-

omy. Examples include Upwork, a platform for recruiting freelance workers; Match.com, a platform

for finding potential romantic partners; and StubHub, a market for buying and selling tickets to

live events. Since eBay is a mature and well established platform, one would expect newer online

markets to exhibit at least the same degree of frictions.

From a theoretical perspective, we are interested in the source of price variation on eBay in

order to test basic models of price formation in perfectly competitive markets. eBay’s posted-price

market for new, first-generation Amazon Kindle Fire tablets, which we refer to simply as “Kindles,”

closely resembles the canonical model of a perfectly competitive marketplace. The eBay market

is quite thick, with thousands of buyers and sellers interacting regularly. In addition, many of

the obvious sources of product heterogeneity are ruled out in our setting. For example, bundling

of new Kindles with accessories is rare in the data, and when present the accessories are of low

value. Seller reliability might induce product heterogeneity, but eBay’s strong warranty against

seller misbehavior should eliminate this as a first-order concern for buyers. These features suggest

1

We discuss the various theories for how market frictions generate price dispersion in Section 2.

PREDICTING PRICE DISPERSION 3

that consumers ought to view the various seller listings as near-perfect substitutes. Yet, we find

that the standard deviation of the price for new Kindles on eBay is 21.2% of the mean price.

2

In order to provide a theoretical benchmark for our analysis, online Appendix B provides a

simple model of a dynamic, frictionless posted-price setting with profit maximizing sellers that

have heterogeneous reservation values (or, alternatively, storage costs). We show that if there is

variation in the day-to-day market clearing price, then all sellers ought to choose a price near the

top of the support of the distribution of market-clearing prices. The intuition is rather simple:

if sellers are patient and the storage costs are not outlandishly large, then patient sellers ought

to be willing to wait for their listing to sell for a high price on a day when the market-clearing

price is idiosyncratically high. However, if all sellers behave in this manner, then there cannot be

nontrivial variation in the market-clearing price. Since price dispersion clearly exists in the real

world, some assumption of our model must be violated. Our analysis focuses on the roles of product

heterogeneity and market frictions in driving price variation, but there are other possibilities that

we do not view as plausible given the features of the eBay platform and the fact that the Kindle is

a small consumer electronics device. For example, the sellers could be impatient or have very high

storage costs, which seems unlikely for a small consumer electronics product. The sellers might not

have rational expectations, but 90 days of prior listings with the sales outcomes are available on

the platform to inform seller expectations. Sellers could also price in a non-profit-maximizing (i.e.,

irrational) fashion or make mistakes, but we feel it is unlikely that this is driving the behavior of

a sizable fraction of the sellers, many of whom have an extensive eBay participation history.

It is worth taking a moment to identify what distinguishes the eBay posted price market for

Kindles from other markets for homogenous products. For example, spot markets for commodities

(e.g., gasoline) exhibit substantial price variation. In reality, these markets contain liquidity traders

that have a need to transact in the near-term that, in effect, renders them “impatient.” For

example, oil refiners pay significant storage costs for their products, which makes them impatient

sellers. While it is easy to imagine time constraints that could make buyers on eBay impatient,

such as the need to purchase a present for a quickly approaching holiday, it is hard to see why

sellers would be eager to be rid of an easy-to-store, relatively inexpensive electronics product when

waiting might bring a significantly higher price.

Our raw data consist of 1,298 downloaded HTML pages listings for new Kindles on eBay. These

pages allow us to see virtually all information displayed to the buyer. The first portion of each

listing’s webpage includes the seller-supplied title and photos of the product, the price and ship-

ping cost, and a measure of the seller’s reputation computed by eBay. The second section is a

standardized description of the product, provided by eBay, that concisely spells out the technical

features of the Amazon Kindle, as well as eBay’s definition of a “new product.” The third section

2

One possible concern is that perhaps many eBay sellers incorrectly list used items as “new,” but this does not appear

to be a meaningful problem in our dataset. A manual inspection of 200 listings revealed 78 listings that explicitly

mentioned that the item was factory sealed, three listings suggesting the box had been opened, and the remaining

listings either had no seller customized description or did not explicitly repeat the definition of a “new” item beyond

what eBay provides as a standard description for new Kindles. We found no examples of items with significant usage

prior to listing the item for sale.

4 AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

of each listing displays additional, customizable information provided by the seller and can include

additional photos and/or formatted textual descriptions.

The information contained in the first and third sections is almost entirely at each seller’s dis-

cretion and is highly variable across listings. We captured all text information the seller provided

about the product, as well as the number, size(s), and type (stock or non-stock) of the photos the

seller posted in his or her listing. We find that the item description provided by the sellers varies

widely from listing to listing. For example, the listings had an average of 4.09 photos with a stan-

dard deviation of 4.39. Listings also had an average of 131 words of text written by the seller, but

the standard deviation of the number of words is 280 and 16% of listings include no seller-provided

description at all. We also parse the content of the text using a bag-of-words (BoW) approach,

leaving us with a total of 220 regressors that characterize each of the Kindle listings in our dataset.

Our first goal is to assess the amount of price variation we can explain by applying machine

learning techniques to these high-dimensional observables. The existing literature has made little

headway in explaining online price variation, but we investigate whether this is because previous

studies have ignored some information observed by the user (e.g., our text and image variables),

inducing an omitted variable bias, or whether the cues that consumers extract from these data

manifest themselves in complex and subtle ways that are masked by restrictive functional forms

used in previous studies (e.g., ordinary least squares versus machine learning models), or both.

To address this question, we first construct a restricted dataset using only regressors comparable

to those employed in the prior literature. We measure the independent importance of our richer

dataset by comparing the explanatory power of a given model estimated on the restricted data to

the explanatory power of the same model estimated on the full dataset. The importance of the

model employed is assessed by comparing the predictive power of the two models estimated on the

same dataset. Throughout we measure price predictability using a modified form of the R

2

statistic

that is applicable to both OLS and the random forest algorithm. We can explain 13% of the price

variation using an ordinary least squares (OLS) model and our basic dataset, which is in line with

the weak predictive power observed in the previous literature.

3

An OLS model estimated on our

full set of variables explains 19% of the price variation, meaning the rich set of regressors alone

improves the predictive power of OLS, but only slightly.

We then examine the predictive power of an alternative model based on a random forest (Breiman

2001 [15]).

4

Much like a k-nearest neighbor or a kernel-smoothed regression, a random forest uses

observations that are near the point of interest to generate a localized prediction. A single regression

tree uses a data-driven algorithm to partition the space of regressor values to define what “near the

point of interest” means. Then one level up, a random forest averages the predictions of an ensemble

of regression trees to make a prediction. Random forests have proven popular due to their ability

3

See Section 2 for a brief discussion. This comparison with the previous literature is not intended as a model

selection exercise for many reasons (e.g., the differing datasets). Rather, we wish to make the simpler point that the

vast majority of observed price variation remains unexplained if one relies on OLS techniques and basic observables,

as in the prior literature.

4

We also experimented with other methodologies such as neural networks and boosted gradient trees, but we found

these more complex techniques performed no better than a random forest.

PREDICTING PRICE DISPERSION 5

to capture complex interactions between large sets of regressors in a principled way that allows

for relatively little subjective input from the analyst regarding model selection. When we apply

random forest techniques to the basic dataset, we can explain 20% of the price variation. When we

estimate a random forest model using our full dataset, our explanatory power increases to roughly

42% of the price dispersion. The explained price variation is economically significant at over 10%

of the mean price of a new Kindle. In short, both high-dimensional observables and sophisticated

machine learning techniques are required in tandem to adequately capture the complex process of

information transmission between buyers and sellers that leads to explainable price dispersion.

One possible criticism of our OLS approach is that we may have handicapped standard linear

models by estimating an insufficiently flexible model. To explore this possibility, we build a

dataset that includes a complete set of first-, second-, and third-order interactions of our full set

of regressors, which results in a model with 6,463 variables. After using LASSO (Tibshirani 1996

[50]) to choose our regressors, we find that the linear model still explains only 33% of the variation

in prices. Our conclusion is that while a more flexible linear model can (unsurprisingly) predict

a greater degree of price variation, the model would have to be impractically flexible to begin to

approach the capabilities of machine learning methods.

Another important question is whether our results are somehow contingent on the particular

product or product category we are examining. In Section 5.6, we repeat our analysis on a set of

listings for the Microsoft Surface tablet that we scraped at the same time as the Kindle listings.

We find that we can predict 44% of the price variation across the Surface listings. Section 5.7

analyzes listings for 12 products from across several different product categories, all of which were

scraped in 2018. We can predict between 27% and 67% of the price variation for these 12 products

using machine learning and our rich set of observables. Across these disparate product categories,

our results point to two robust qualitative findings. First, price predictive power using OLS and

the basic, traditional set of observables falls far short of the predictive power of maching learning

and our richer observables. Second, it is the combination of the richer data with the more flexible

methodology that is required to achieve full predictive power, as neither suffices on its own. The

fraction of price variation we explain is economically significant since the standard deviation of the

listings’ prices is between 15% and 63% of the mean price. In short, high price predictability seems

to be common on eBay so long as sufficiently rich data are available and flexible predictors are

used.

One common drawback of machine learning is that with its impressive flexibility comes greater

difficulty in interpreting results. In order to better understand the sources of the predictive power

we uncovered, we partition our variables into intuitive subsets that are likely to measure the amount

of information conveyed (e.g., the volume of text and number of images), variables that represent

the style of the listing (e.g., text style and formatting), and BoW variables that describe the

meaning of the listings’ text. In order to pin down which combinations of variables are providing

the predictive power, we analyze the effect of adding different groups of variables to our basic dataset

and deleting different sets of variables from our full dataset. We find that we can generate accurate

price prediction models using each subset of our data, which implies that the different subsets

6 AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

contain redundant information. We use a variable importance test to assess which variables are

used most heavily by our random forest, and we find that the BoW variables are most important.

It is easy to come up with an information-based explanation for how the volume of information

or the content of a listing’s text predicts a higher or lower price (e.g., explaining a defect in

the packaging), and these communications are credible because of the incentive sellers have to

maintain good reputations (Cabral and Horta¸csu 2010 [18]). We find that we lose only a small

amount of predictive power by estimating our model on only the basic dataset plus the variables

that summarize the volume of information conveyed. We also find that the style variables (e.g., the

number of HTML tags used in a listing) have as much explanatory power as the variables describing

the volume of the information conveyed by the listing. The style of a listing can convey powerfully

to a potential buyer that the seller is a professional—the design of such a listing is costly, but

professional sellers can defray this cost by repeatedly using the same listing template. We argue

in Section 4.3 that inexperienced sellers cannot simply copy another seller’s stylized listing that

has been tuned to increase the sale price without paying a substantial cost in terms of effort. The

combination of these effects makes the style of a listing a credible signal of professionalism.

At the end of the day, however, we find that significant unexplained price variation persists,

suggesting that search frictions also play an economically meaningful role. This may seem counter

to expectations, given the cutting edge search algorithms at eBay users’ disposal, but one possible

explanation is an “embarrassment of riches” problem. Given the sheer scope of the marketplace, it

may be that there are so many relevant results for a keyword search on the phrase “Amazon Kindle

Fire” that it is still costly for consumers to sift through all of them.

The remainder of this paper has the following structure. We start with a discussion of the related

literature in Section 2. Section 3 provides a description of the mechanics of the eBay posted-price

market and describes the listings that we study. Section 4 describes the data we collected. Section 5

presents basic results on the importance of (i) the richness of our dataset and (ii) flexible estimation

techniques for price prediction, discusses robustness checks, and assesses generalizability. Section

6 explores the underlying structure of the data that is captured by our random forest models. We

conclude and discuss some plausible interpretations of the remaining price dispersion in Section

7. Appendix A provides robustness checks to eliminate various alternative interpretations for our

results. Appendix B provides a model of seller behavior in this market, which we include to highlight

the assumptions required for price dispersion to vanish.

2. Related Literature

Lewis (2011 [34]) conducts an exercise similar to ours in that he examines whether the presence

of phrases associated with vehicle quality (e.g., “dent”) influence the final price received in an

eBay Motors vehicle auction. The goal of the analysis is to assess whether these phrases alleviate

adverse selection, which the results support. Our study and Lewis (2011 [34]) share the goal of

assessing the informational content of the listings. However, Lewis (2011 [34]) focuses on explaining

price dispersion amongst heterogeneous products, whereas we focus on predicting price dispersion

PREDICTING PRICE DISPERSION 7

between putatively homogenous products. In addition, we use a much larger set of observables and

apply machine learning techniques to generate our price predictions.

Price dispersion as a consequence of ignorance has been recognized at least since Stigler (1961

[49]). Building on Stigler’s original model of costly search, Diamond (1971 [21]) proved that

profit maximizing firms can act as monopolists if consumers face search costs. Although the

model of Diamond (1971 [21]) does not yield equilibrium price dispersion, it does show that large

deviations from the perfectly competitive outcome are possible if consumers face small search costs.

Reinganum (1979 [41]) shows that price dispersion can arise when consumers discover prices through

a process of sequential search and firms have heterogeneous marginal costs. MacMinn (1980 [35])

shows that price dispersion can also arise under this market structure when fixed-sample search is

used. The core insight of all of these models is that the search cost enables firms to price above

marginal cost, an effect which is then amplified through strategic interaction amongst the firms.

The price dispersion is generated by the mixed strategies firms use when setting prices.

A second potential source of price dispersion is information asymmetries amongst consumers.

These models assume that firms are homogenous, but buyers are asymmetrically informed either

because of heterogeneous buyer search costs (e.g., Salop and Stiglitz 1977 [45], Rosenthal 1980

[43], Wilde and Schwartz 1979 [53], Varian 1980 [51]) or because of heterogeneous outcomes of a

stochastic search process (e.g., Burdett and Judd 1983 [17]). The firms respond to the asymmet-

rically informed consumers by playing a mixed pricing strategy that generates equilibrium price

dispersion. The more recent literature has applied models of this form to study online price clear-

inghouses as important strategic actors in the affected markets (e.g., Baye and Morgan 2001 [8],

Baye et al. 2006 [11]). We do not believe that these models provide a realistic description of eBay,

with its many participants connected by a common online platform, since they assume sellers have

a captive market of buyers that are either uninformed about the prices of competitors (e.g., Salop

and Stiglitz 1997 [45], Rosenthal 1980 [43], Wilde and Schwartz 1979 [53], Varian 1980 [51]) or are

loyal customers of the firm (Baye and Morgan 2001 [8]).

Although eBay’s web-based, interactive search services would seem, at first glance, to make it

easy to obtain a price quote, it may be costly for the user to parse the search results if the listings

are heterogeneous. The existence of search frictions on eBay has been established in the various

auction markets that eBay runs. Bodoh-Creed, Boehnke, and Hickman (2017 [14]) estimate that

bidders in eBay auctions have a participation cost of $0.07 per bid placed. Backus, Podlow, and

Schneider (2014 [5]) and Schneider (2016 [46]) explicitly test for search frictions by exploiting the

algorithm eBay uses to order the listings served to buyers. As both use a similar methodology, we

discuss the earlier of these papers here. The authors analyze a sample of new DVDs for sale on

eBay and show that including the word “new” in the title of the listing makes it more visible, as

these listings are seen both by buyers that search for the DVD’s title (e.g., “Batman Begins”) and

those that explicitly search for the item (i.e., “Batman Begins new”). The increased visibility of

adding the word “new” results in a 3.5% higher probability of sale and an $0.83 higher sale price

conditional on sale. We argue in Section A.2 that this sort of manipulation of the search algorithm

is not driving our empirical results.

8 AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

A large branch of the more recent empirical literature on price dispersion has focused on tests

of various models. For example, Sorenson (2000 [48]) shows that pharmaceutical products that

necessitate repeated purchases have lower price variation since the consumers have a strong incen-

tive to find a low price. Baye, Morgan, and Scholten (2004 [9]) and (2004 [10]) use data from a

price comparison web site and data on the market structure across different products to test the

implications of information clearinghouse models. Baylis and Perloff (2002 [13]) find a combina-

tion of high-quality, low-priced firms competing with low-quality, high-priced firms in the online

markets for scanners and digital cameras, which the authors interpret as support for the two-price

equilibrium predicted by Salop and Stiglitz (1977 [45]). Some papers estimate a structural model

to tease apart the sources of price variation (e.g., Hong and Shum 2006 [30]).

There are prior studies that attempt to predict product prices and report statistics that describe

their explanatory power, but many of the estimates have features that make them difficult to com-

pare with our results. Among the papers that are comparable to our project, Baye, Morgan, and

Scholten (2006 [12]) attempts to predict the price dispersion for online consumer electronics sales.

Their price regression can explain 17% of variation using regressors capturing attributes of the re-

tailers, but the explanatory power jumps to 72% when the regressions include firm-specific dummy

variables. Clay, Krishnan, and Wolfe (2001 [19]) attempts to predict prices and achieves a high

degree of explanatory power, but their regressions include time dummies. Time dummies explain

a great deal of the price variation across our sample due to product depreciation, but this price

variation is unrelated to the cross-sectional price dispersion generated by product heterogeneity.

Clay, Krishnan, and Wolfe (2002 [20]) provides an analysis of the price dispersion of text books

that explains 2.7% of the dispersion when regressions do not include store-level dummy variables

and 19.2% of the dispersion when the dummy variables are included. Pan, Ratchford, and Shankar

(2002 [38]) study the price dispersion across eight categories of retail products and can explain at

most 22% of the price dispersion, with the notable exception being that their regressions explain

43% of price variation for compact discs. Our general conclusion from the empirical literature is

that price dispersion is difficult to explain without including regressors such as seller-specific fixed

effects. Ancarani and Shankar (2004 [1]) find that internet retailers of books and compact discs have

lower price dispersions relative to traditional retailers. Ratchford, Pan, and Shankar (2003 [40])

use BizRate.com data covering a wide array of products to argue that price dispersion decreased

between 2000 and 2001, which the authors attribute to the market maturing.

Since price dispersion results from pricing strategy, the literature on online price setting and

competition is relevant. Lal and Sarvary (1999 [32]) challenged the assumption that internet com-

petition will lead to lower prices, and they formulate a model of how the internet can decrease price

competition. Lynch and Ariely (2000 [33]) find in an experiment that lowering the cost of infor-

mation reduced price sensitivity for differentiated products like wines. Shankar and Bolton (2004

[47]) find that attributes of competition explain most of the variance in retailer pricing strategies.

Even when seller dummy variables can explain a great deal of the price variation, it is unclear

what exactly the dummy variables are capturing. For example, suppose that one concludes that

Best Buy, a brick and mortar electronics retailer in the United States that also has an online store,

PREDICTING PRICE DISPERSION 9

has consistently higher prices than other electronics retailers. The higher prices at Best Buy could

be because the products are different (product heterogeneity), it could be that Best Buy offers

generous return policies (heterogeneous retailers), or that Best Buy has a near monopoly over brick

and mortar electronics sales in many regions that allows the firm to charge higher prices (market

competition).

One of the themes that emerge from our empirical results is that a variety of characteristics

of a listing’s style (e.g., the number of HTML tags used) can serve as signals of a seller’s profes-

sionalism. Elfenbein, Fisman, and McManus (2012 [26]) explicitly study whether assigning part

of an auction’s revenue to a charitable cause can act as a substitute for a high seller reputation.

Although thematically similar to our result, the study used quasi-experimental variation between

listings from the same seller to identify the effects.

Dinerstein et al. (2017 [22]) directly examines a redesign of the eBay platform meant to encourage

buyers to consider low-priced products and enhance price competition amongst sellers. Prior to

May 19, 2011, eBay showed buyers that searched for a product a list of “Best Match” results that

did not explicitly consider price when ordering the products displayed to the user. From May 2011

through the summer of 2012, eBay displayed the posted-price listings in order of increasing total

price. Starting in late 2012 (prior to our data collection period), eBay returned to using the “Best

Match” as the default. Dinerstein et al. (2017 [22]) estimate a model of consumer demand and

assume that users consider a random number of listings that are randomly selected based on either

the listing’s quality or the price under the redesigned platform. They show that price dispersion

decreases when the platform emphasizes low prices.

We would also like to highlight a handful of other papers that have worked directly with eBay

“Buy It Now” data. For example, Hui et al. (2016 [31]) studies the interaction between the effects of

reputational mechanisms and insurance against seller misbehavior on the prices received by sellers

in Buy It Now and auction listings on eBay. Saeedi and Sundaresan (2016 [44]) study a sample of

Buy It Now and auction listings on eBay to understand the effect of a change in the reputation

system on buyer and seller behavior. Other papers have studied the relationship between Buy It

Now postings and auctions with a particular focus on the economic forces that allow the two sales

mechanisms to coexist on the same platform (e.g., Einav et al. 2013 [23], Einav et al. 2016 [24],

Einav et al. 2015 [25]). Nosko and Tadelis (2015 [37]) documents that buyers’ experiences with

sellers spills over onto other sellers, and the authors propose a novel and more effective metric of

interaction quality. Elfenbein, Fisman, and McManus (2015 [27]) study the interaction of the value

of quality certification and market structure. To the best of our knowledge, we are the first to

use data from a platform like eBay to study price dispersion, utilize contextual data (e.g., text or

images) as rich as ours, or bring machine learning techniques to bear to explain price dispersion.

3. The eBay Setting

eBay uses a fine-grained, hierarchical product classification system for the goods listed for sale on

the platform. For example, all Kindles are in the “Tablets & eBook Readers” category, but there

also exists a separate category at the bottom of the hierarchy for new, first-generation Amazon

10 AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

Kindle fire tablets with 8 GB of storage. The classification system allows for heterogeneity within

broad product categories (e.g., tablet computers) and very limited product heterogeneity at the

narrowest level of classification.

We focus on the “Buy It Now” listings that use a posted-price format, which make up more than

half of all listings on eBay today. A seller using a posted-price format has the option to provide

title text and a photo that will appear in the page of search results observed by prospective buyers.

For consumer electronics products, the seller must also provide the exact specifications (e.g., 8 GB

of storage) and condition (e.g., “New”) of the product so that it can be placed within the eBay

product hierarchy. The price of the product as well as the shipping options must also be chosen.

The seller can either offer flat-rate shipping or choose to have shipping calculated by eBay. If the

shipping is calculated by eBay, a forecasted price for shipping is computed for a prospective buyer

based on the seller’s and the prospective buyer’s locations as well as package size, weight estimate,

and shipping company specified by the seller. Finally, the seller is allowed to choose the duration

of the listing from a discrete set of options (e.g., 3 days, 7 days, etc.).

In addition to supplying a platform for hosting posted-price listings, eBay provides payment

and sales infrastructure for the buyers and sellers. eBay also provides a money-back guarantee for

buyers, which can be triggered easily through the website and results in a rapid (less than five

days) refund of the money paid to the seller.

A buyer on the eBay site begins by searching for items using keywords and an optional selection of

which broad product category to search within. The user is then served a page of results. Although

eBay continuously experiments with how to order the items on the search page, conversations with

eBay employees during the time our data was collected (28 December 2012 - 20 September 2013)

indicated that items appeared early in the list of results based on (1) whether the listing’s title

included all of the keywords that the buyer included in his query and (2) the timing of the listing’s

termination, with listings that expire in the near future being closer to the top of the search results.

5

After the search results are generated, a buyer can click on a listing on the search results page to

see the webpage for a listing, reorder the search results by price, or view successive pages of search

results. Buying an item requires viewing the webpage for a listing, clicking a “Buy It Now” button

at the top of the listing webpage, and entering the required payment information.

The webpage for a listing that a potential buyer sees once he or she clicks on an item in the search

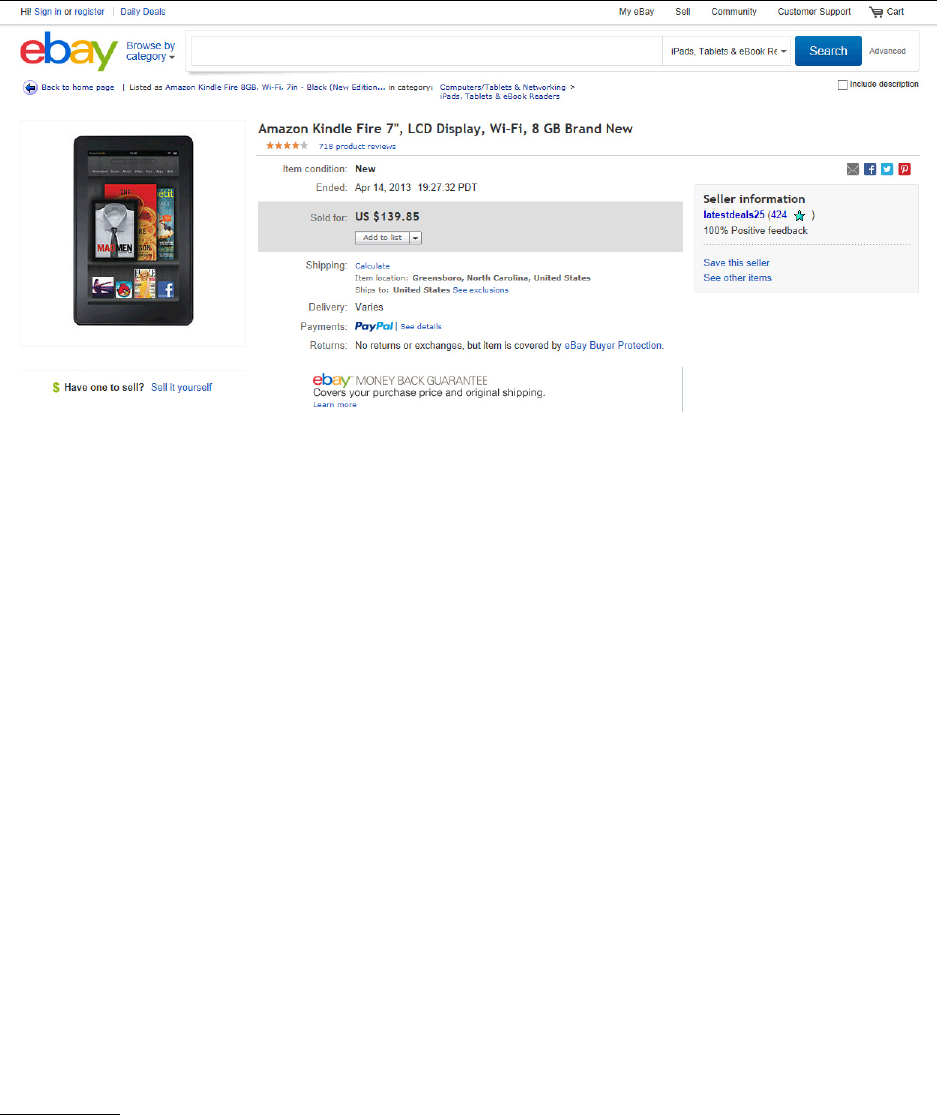

results page has a format with three sections. Figure 1 displays an example of the first section of

the listing, which we refer to as the title section. The title section includes a brief text description

of the item written by the seller and one or more photos that are provided by the seller. The

title section also has a standardized format that includes the price chosen by the seller, shipping

information, the item’s condition (e.g.,“New”), and a seller reputation score. The seller reputation

score is equal to the total number of positive buyer ratings minus the number of negative ratings.

The second section of each listing is a box that provides a standardized, uniformly formatted set

of information about the product that is provided by eBay, an example of which is depicted in Figure

5

We argue in Appendix A.2 that our results are not driven by the efforts of sellers to manipulate the prominence of

their listing in the search results through choice of the listing’s title.

PREDICTING PRICE DISPERSION 11

Figure 1. eBay’s Standardized Listing Information

2. The box describes the precise definition of the condition of the product and detailed technical

specifications of the product (e.g., CPU processor speed). Since the section is standardized across

our sample, it does not play a role in our analysis. However, the existence of this section shows

that there can be little ambiguity about either the product being sold or the product’s condition.

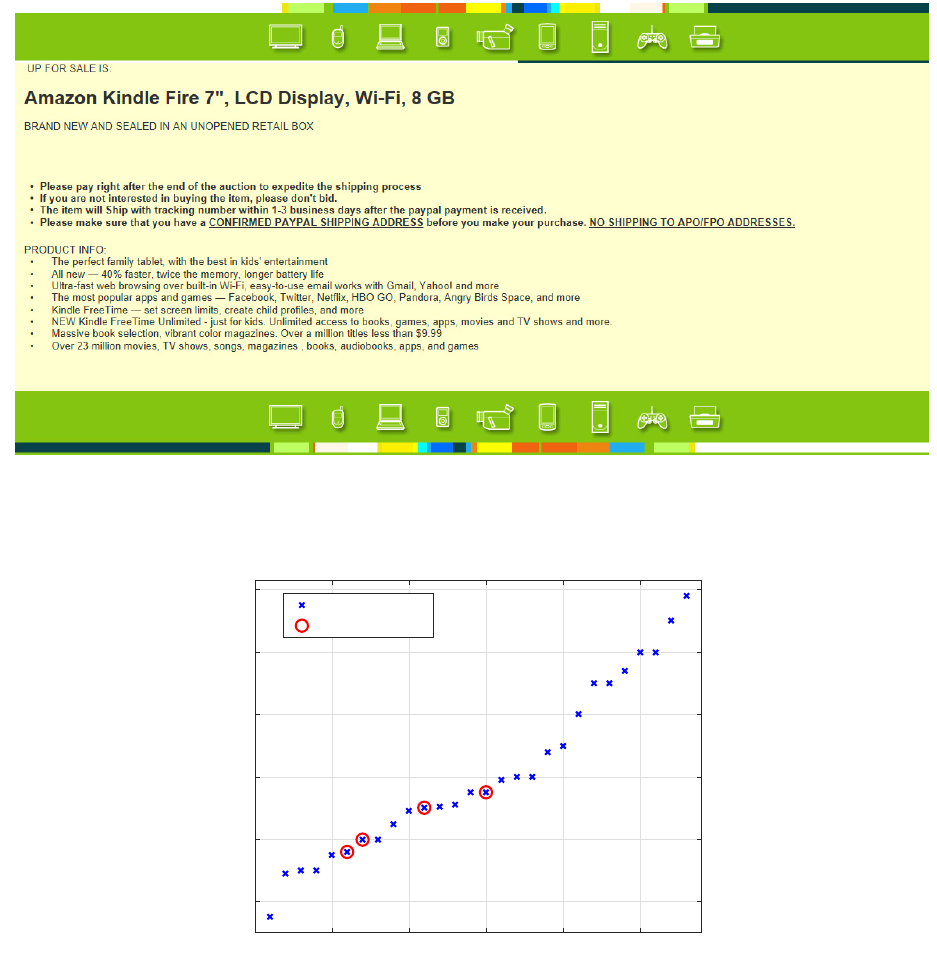

A fairly elaborate example of the third section of each listing, which we refer to as the description

section, is provided in Figure 3. This third section is created entirely by the seller and is optional,

with about 16% of listings in our sample having no content in the description. The seller has

the ability to provide a large amount of text and images, and the text can be formatted using

HTML tags (e.g., bolded text). The challenge for analyzing the information in the description is

condensing the many features of the text and images into data amenable to statistical analysis.

4. Data

Each data point is a single listing for a new, first-generation Amazon Kindle Fire. We collected

our posted-price listings using a scraping program that captured the listings from sellers located

within the United States that posted to the platform between December 28, 2012 and September

30, 2013.

6

Since our primary interest is the price setting behavior of sellers, we include listings in

our sample regardless of whether they resulted in a sale. We include only listings offering a single

unit, and we eliminated a small number of listings with implausible prices (i.e., below $15), which

yielded a total sample of I = 1, 298 listings. If a seller offered multiple listings across our sample, we

6

We searched in the “iPads, Tablets, and eBook Readers” category using the keywords “Amazon Fire.” This returned

all of the active listings that included either of these words. We did not include the word “Kindle” because this tended

to return listings for much older black and white Kindle e-readers, which were more numerous on the eBay site at the

time. After experimenting with various keyword combinations for our scraping algorithm, we found that the search

phrase “Amazon Fire” provided the best balance of specificity and breadth. This combination of search terms and

keywords allowed us to capture virtually all listings of Kindle Fire tablets on eBay during our sample period.

12 AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

Figure 2. eBay’s Standardized Description

treat each listing as a distinct data point. There are 911 unique sellers in our dataset, 5 of which

have 10 or more listings. The vast majority of sellers had very few listings: 79.5% of them had

a single listing and another 12.7% had 2 listings. As a robustness check we analyze the behavior

of sellers that offered multiple listings in Appendix A.3, where we argue that our main results are

invariant to inclusion of these listings.

One concern is that despite the items being listed as in “New” condition, the Kindles might

actually be used and in “Like New” condition. eBay requires that the seller confirm that his or her

product matches the eBay definition of a “New” product before posting the listing, so a mistake by

the seller is unlikely. A manual inspection of 200 listings revealed 78 that explicitly mentioned that

the item was factory sealed. Only three listings indicated that the Kindle had ever been opened

and included comments like “We opened the box and charged the unit & confirmed that it power

[sic] up ok.” Most of the remaining listings either had no description or did not explicitly repeat

the definition of a “New” item that eBay provides above the description. We found no examples

PREDICTING PRICE DISPERSION 13

Figure 3. Seller’s Customized Description

0 5 10 15 20 25

Units Available

120

140

160

180

200

220

Price

Unit Available

Unit Sold

Figure 4. Supply Curve on 23 June 2013

of items with significant usage prior to listing the item for sale, and eBay provides substantial

incentives for sellers not to blatantly lie in their descriptions.

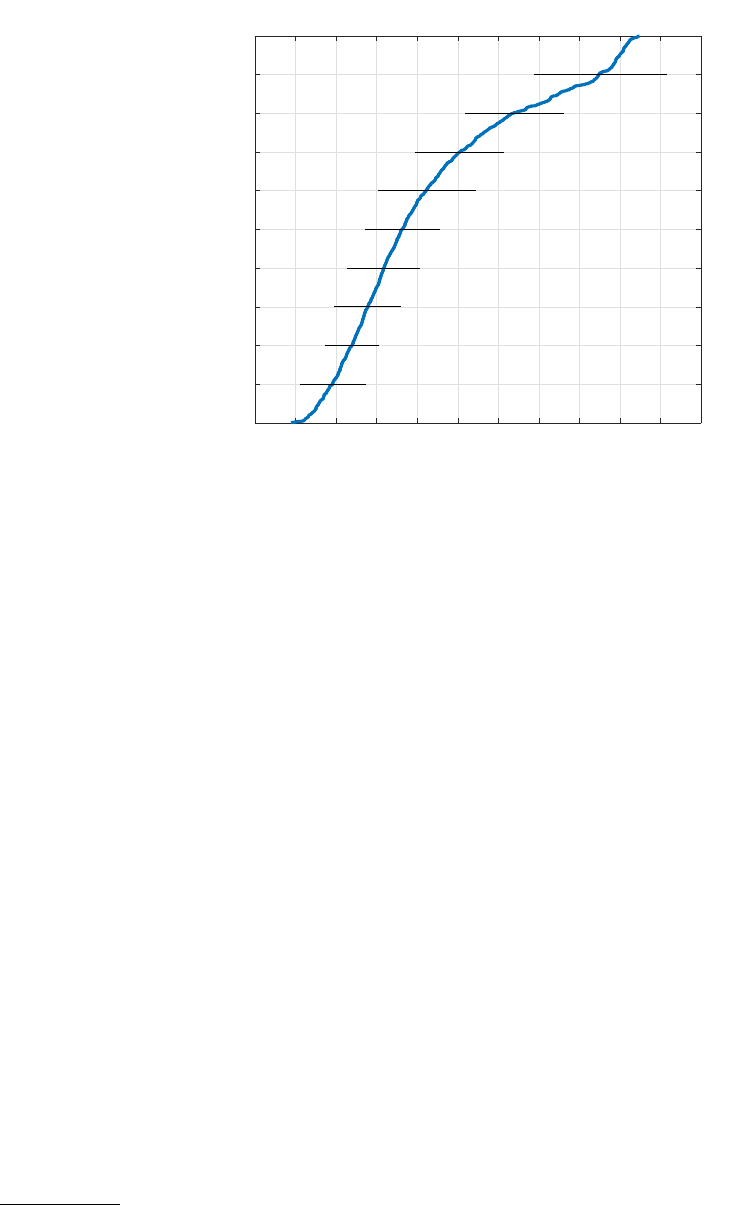

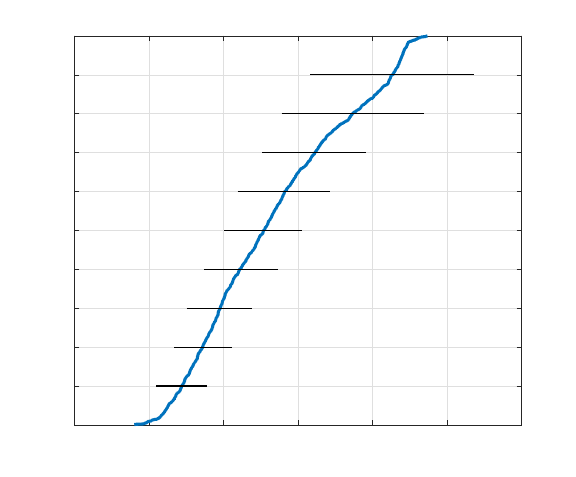

The median day in our sample period had just over two dozen active Kindle Fire listings. An

exemplar of a daily supply curve is shown in Figure 4. On a typical day the listings that result in

sales have lower than average prices, but there are usually higher priced listings that also generate

sales, even when some listings with lower prices go unsold. Some listings exceed the retail price of

14 AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

50 100 150 200 250

Days from Jan 1 2013

100

110

120

130

140

150

160

170

180

190

200

Dollars

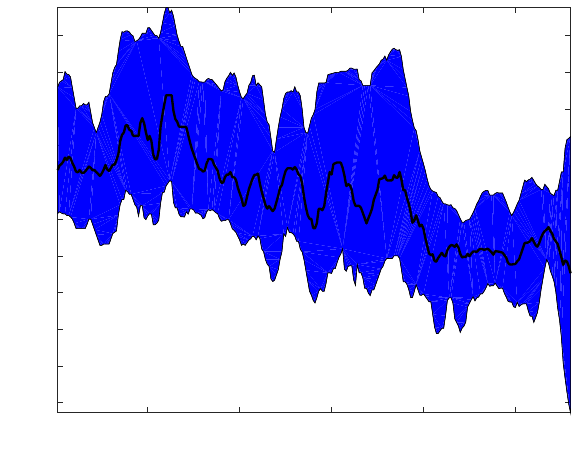

Figure 5. Price Trend Over Time

$159 on Amazon’s website, but are less likely to result in a sale. It is well documented that eBay

users sometimes pay more than fixed retail prices for goods, so it is not obvious that these high

price quotes are not optimal for sellers. For example, Malmendier and Lee (2011 [36]) find that

more than 40% of auctions exceed the simultaneous fixed price across a broad variety of products,

with overpayment averaging roughly 10% of retail price.

We describe our dataset in three sections. First, Section 4.1 outlines the features of listings that

we collected, and Section 4.2 elaborates on how we incorporate the text data into our analysis.

Section 4.3 closes with a discussion of the features of the eBay market and interface that make

features of the listings credible signals about the product and the seller in equilibrium.

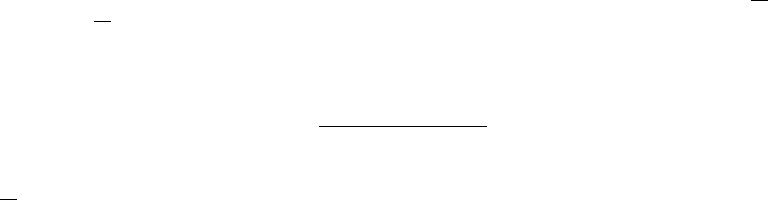

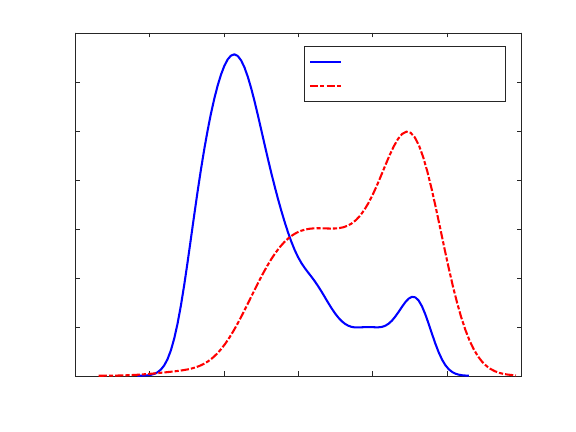

4.1. Variables. Figure 5 provides a time series plot of the median price of the listings on each

day, and the band describes the interquartile range of the distribution of prices. All of the time

series have been smoothed using a seven-day moving average filter. Two features are of note in

Figure 5. First, the market shows no sign of converging toward satisfaction of the LOP by the

end of our nine month sampling period. The persistence of price dispersion is well documented in

other online markets, so this is not terribly surprising. The second feature to note in Figure 5 is

the trend toward lower prices as the sample period persists. Again, this is not surprising since

the value of electronics products depreciates (even new ones) as the anticipated release dates of a

newer version approaches.

It is worth taking a moment to consider the ideal dataset for our purposes and how this informs

our handling of the time trend in our analysis. The ideal dataset would be a snapshot of the prices

offered in a market with hundreds of active listings, which would allow us to hold all time-varying

features of the market fixed and isolate what caused the seller to believe that an unusually high or

PREDICTING PRICE DISPERSION 15

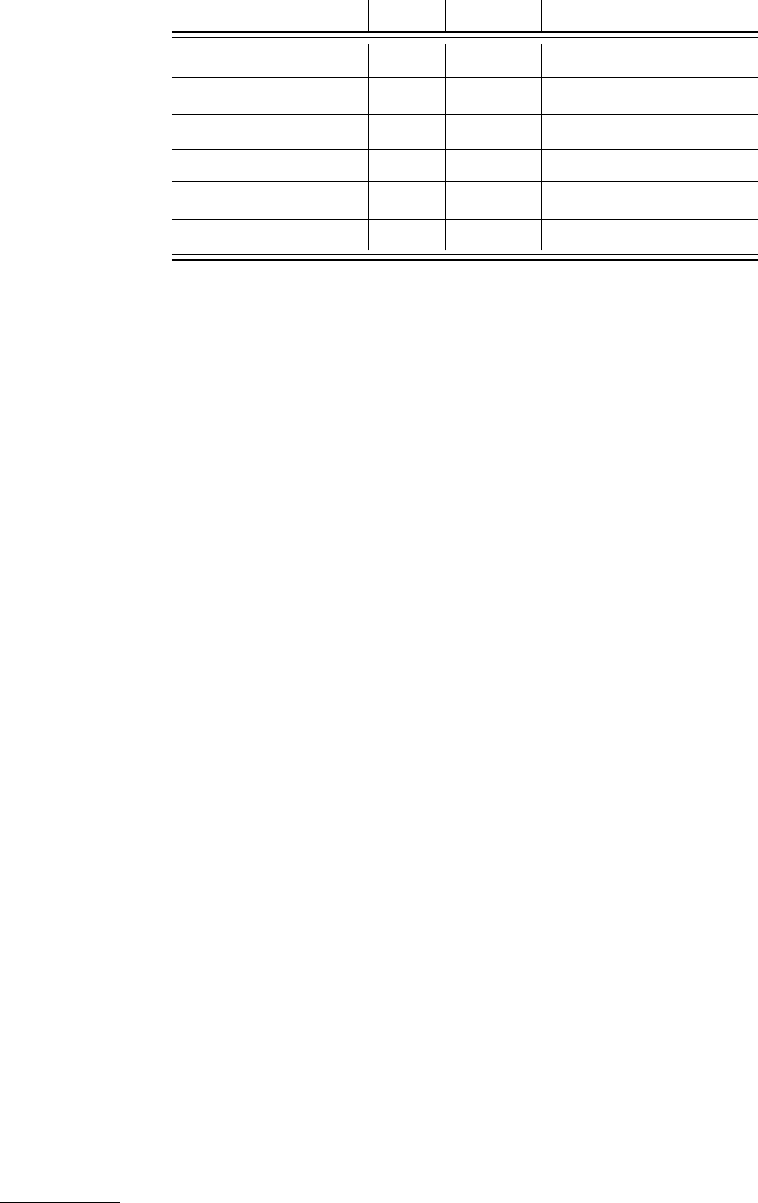

Variable Mean Median Standard Deviation

Price 0 -$1.74 $30.23

Shipping Price $3.71 0 $4.96

Shipping Calculated 0.437 0 0.496

Returns Allowed 0.303 0 0.460

log(Seller Score) 4.78 4.72 2.02

Re-listed 0.168 0 0.374

Table 1. Basic Data Set Summary Statistics

low price was warranted for that listing at that point in time. In other words, we want to explain

price-variation in a cross-section of listings and not across time. Unfortunately, the market does

not contain hundreds of listings on any given day, so we collect listings across days. If we were

to include time dummies or a time trend in our regressions, then we would be able to explain

some price variation simply through these time-dependent variables. However, since our research

focus is on cross-sectional price variation rather than variation over time, the appropriate course

is to de-trend our price variables. Once de-trended using a linear time trend in price, the standard

deviation of the detrended prices is $30.23, which is equal to 21.2% of the raw mean price.

7

For our Basic Data Set we only include variables that are analogous to observables used in earlier

papers that attempted to explain online price variation. Price is either the price at sale or, for

items that did not ultimately sell, the final price the seller offered before removing the unsold item

from the site. Shipping Price is the price of shipping if a flat rate was included in the listing and 0

otherwise. Shipping Calculated is a dummy variable equal to 1 if eBay automatically calculates the

shipping. Returns Allowed is a dummy variable equal to 1 if the seller accepts returns. Re-listed is

a dummy variable set to 1 if the seller chose to re-list the item after the item did not sell during the

listing’s initial duration. Seller Score is a numeric value indicating the net positive feedback left by

individuals that had purchased from this seller previously. Prior work has shown that this statistic

is not perfectly informative of seller quality (see, for example, Nosko and Tadelis 2015 [37]), which

leaves room for the seller to use other aspects of the listing (e.g., layout and content) to signal his

or her professionalism and reliability. In our empirical exercise we will explicitly control for both

sources of information about the seller’s professionalism. Variable names and summary statistics

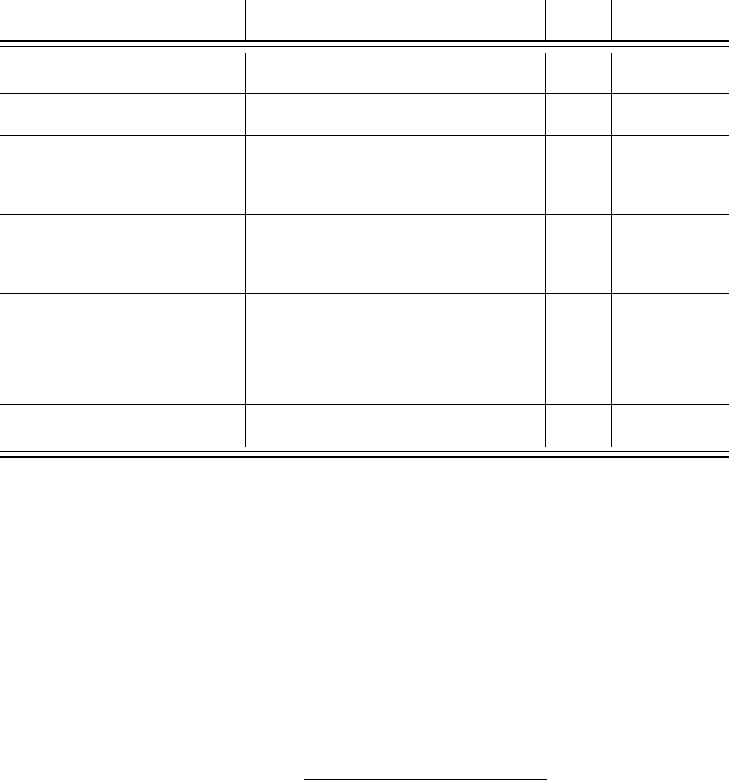

for the basic dataset are included in Table 1.

Our Full Data Set includes all of the information in the basic dataset as well as features gathered

from the portions of the listing that can be customized by the sellers. These data include the

number of characters, words, special characters, and fraction of upper case characters in the title

and the description of the listing. We record the number and size of the photos provided by the seller

7

We also experimented with higher-order polynomials and did not find any statistically or practically significant

differences from the linear trend model.

16 AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

as well as whether the seller used one or more stock images. A stock image is a professional image

of the item that one can download from the internet (e.g., from Amazon’s website), as opposed to

a non-stock image that the seller might take with his or her own camera of the actual item they

are selling. We also capture the number of HTML tags (e.g., sections of bold text), the number of

font sizes, and the number of changes in font size in the seller’s description of the product. These

variables all reflect techniques that a seller might use to make text eye-catching. We also record a

categorical variable describing whether the listing started on the weekend (Saturday or Sunday),

early in the week (Monday - Wednesday), or late in the week (Thursday or Friday). Finally,

we record whether the listing was generated by eBay’s mobile phone app. Summary statistics are

provided in Table 2.

4.2. Natural Language Data. The natural language data was handled using a Bag of Words

(BoW) approach (Gentzkow, Kelly, and Taddy 2017 [29]). First we separated each listing’s text

into sentences and words, and each word was stemmed using Porter’s Stemming Algorithm (Porter

1980 [39]). The stemming algorithm is capable of identifying different forms of the same word. For

example, the stemmer can identify “charges,” “charged,” and “charging” as sharing the same root

“charg.” Correctly stemming the text removes redundant features and insures an accurate count

of the number of appearances of each word. We do not attempt to identify negations (e.g., “no

returns”) algorithmically as this is much more computationally difficult and subject to a greater

error rate. After the stems have been identified and the number of occurrences of each stem in

each listing has been computed, we reduced the dimensionality of the natural language data in

two steps. First, we formed a list of the 1,000 most frequently appearing elements of the BoW.

After eliminating articles and prepositions, we manually reduced the set to 190 word stems that we

thought represent potential sources of heterogeneity and appear in at least 5 of the listings. For a

full list of the words, please see Appendix D.

8

Second, we used principal component analysis (PCA) to further reduce the dimensionality of the

BoW data.

9

PCA is a methodology for projecting a set of data points onto a set of orthogonal

basis vectors, usually referred to as components. To fix ideas, each BoW datum is a 190-dimensional

vector, call it w

i

= [w

1,i

, . . . , w

190,i

]

|

, where w

k,i

indicates the number of times the k

th

word stem

occurred in the seller’s description in the i

th

listing page, minus the mean count of the k

th

word

stem across all listings. The first principal component is chosen by picking a vector of weights or

factor loadings, call it π

1

= [π

1,1

, . . . , π

1,190

]

|

, to construct a linear combination of the regressors,

P C

1,i

= w

|

i

π

1

, that has the highest possible variance subject to the constraint that π

1

has unit

length. The second and following components, each represented by their own factor loading vector

π

j

, j ≥ 2, are constructed to be orthogonal to all previous components, and to have the highest

possible variance subject to the same unit length constraint.

8

We also experimented with using dummy variables for the appearance of a word in a listing as opposed to the word

count. We found it had no effect on our results.

9

We experimented with using the BoW entries as regressors directly but found that this did not result in improved

predictive performance in the random forest model. However, using the BoW counts as regressors in our OLS model

resulted in massive overfitting that caused the OLS out-sample-R

2

(see Section 5.2) to be negative.

PREDICTING PRICE DISPERSION 17

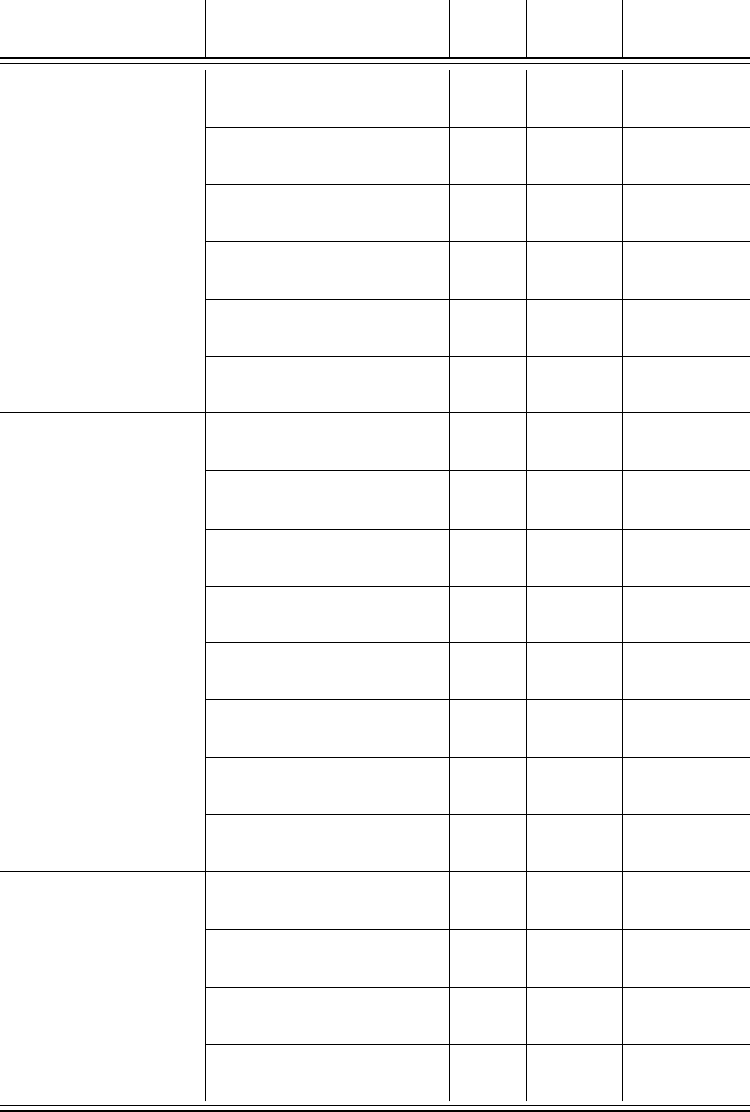

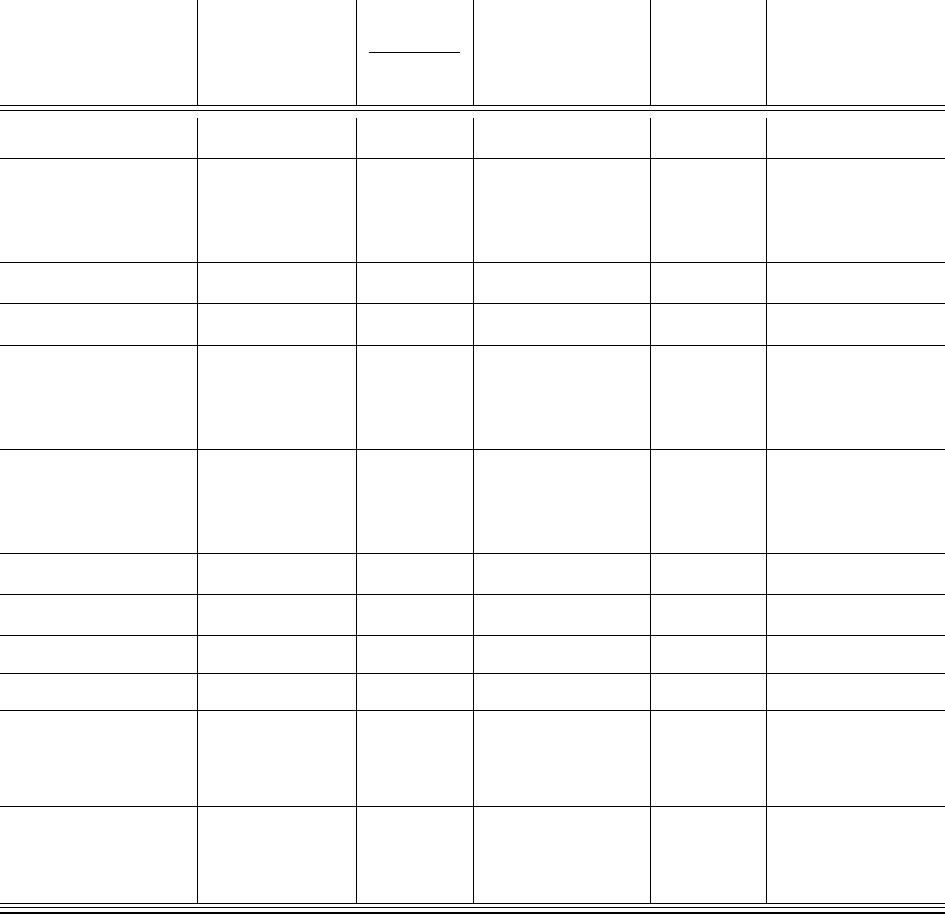

Variable Groups

Variable Mean Median

Standard

Deviation

Title

Length

Number of

Characters in Title

60.1 66 16.4

Number of

Words in Title

10.6 12 2.68

Title

Style

Number of Special

Characters in Title

15.0 16 4.63

% Uppercase

Characters in Title

0.312 0.263 0.168

Title

Images

Number of Photos

in Title

2.15 1 1.86

Number of Stock

Photos in Title

0.439 0 0.509

Description

Length

Number of Words

in Description

131 27 281

Description

Style

% Uppercase Characters

in Description

0.122 0.075 0.184

Number of HTML

Tags in Description

102 16 201

Number of

Font Sizes

1.58 1 1.451

Number of Font

Size Changes

4.33 0 16.0

Description

Images

Kilobytes of Photos

in Description

16.0 0 80.1

Dummy: 1 to 5

Photos in Description

0.103 0 0.304

Dummy: 5 or more

Photos in Description

0.063 0 0.243

Miscellaneous

Variables

Dummy: Posted During

Weekend

0.277 0 0.448

Dummy: Posted During

Early Week

0.429 0 0.495

Dummy: Posted During

Late Week

0.294 0 0.456

Dummy: Posted with

eBay Mobile

0.242 0 0.428

Table 2. Full Data Set Summary Statistics (without Bag of Words data)

18 AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

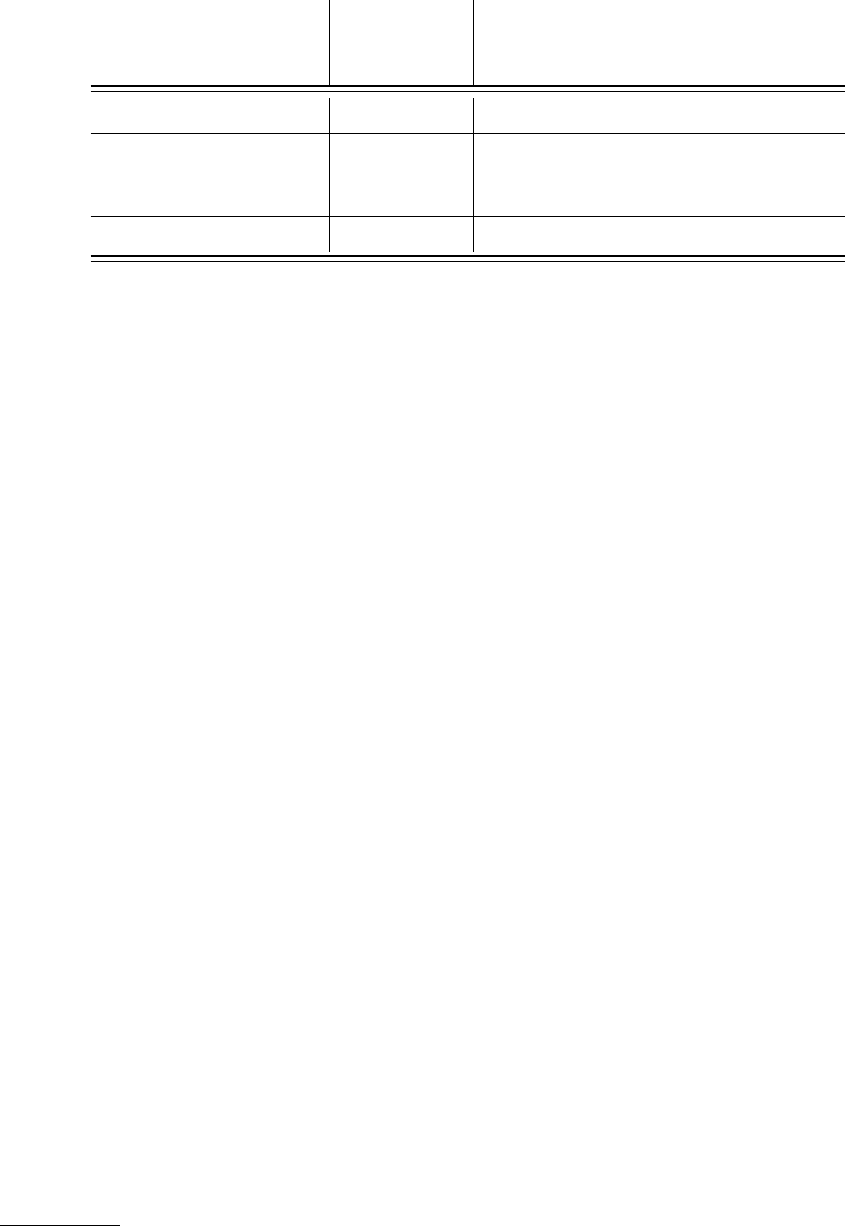

Component Name

% Variance

Explained

Words with

High Loadings

Description of Item 43.6 “new,” “read,” “include,” “content”

Shipping and Payment

Information

11.9 ”paypal,“ ”return,“ ”payment,” “buyer”

Technical Specifications 7.3 “display,” “connect,” “gb,” “charge”

Table 3. Interpretation of PCA components

Note that the orthogonality and unit-length constraints together imply that the variances of

successive principal components will be monotone decreasing, or VAR(P C

j

) > VAR(P C

j+1

), for

each j. Intuitively, what this means is that one can use the first few components to capture most

of the variance in a set of data with much higher dimensionality. Our analysis used the first 25

principal components, which collectively account for 90% of the variance of the 190 BoW variables.

We experimented with using the first 70 principal components in our analysis, which explain 98%

of variance, as a robustness check. The difference in the results was negligible.

The factor loading vector π

j

determines which variables have the most influence on component

j. When the factor loadings identify clusters of words that share a common theme, we can attribute

an interpretation to the corresponding principal component P C

j

. Table 3 describes the meanings

we attribute to the first three components by observing which word stems are given nontrivial

weight by the first three factor loadings, π

1

, π

2

, and π

3

. The fact that the principal components

with the most explanatory power have reasonable interpretations gives us confidence that the PCA

routine is reflecting meaningful attributes of the listings. Together these three components account

for more than 60% of the variation in the BoW data.

There are other methods one can use to reduce the dimensionality of text data. For example, one

could use topic modeling techniques such as latent Dirichlet allocation (LDA) to algorithmically

define topics and ascribe a metric of each topic’s influence on the text in each listing.

10

We

experimented with this approach, but found that the topics were not strongly associated with

any particular words. This is not terribly surprising since LDA techniques are known to work

relatively poorly when the text in each data point are short, which is the case in our data since

the median number of words in the description is only 27. Simply put, one needs larger samples of

text to be able to attribute topics to any given listing’s text.

4.3. Sources of Credible Signaling. The predictive power of the volume of text and the presence

of images can be understood easily in information-theoretic terms. For example, the text of the

listing could reveal flaws in the product that reduce the price, and an image of the product could

10

We thank a referee for this suggestion.

PREDICTING PRICE DISPERSION 19

verify that the box is factory sealed. The credibility of any such claim is re-enforced by eBay’s

reputation scheme, which we include as one of our controls.

The style variables that we include in our analysis could also indirectly convey information about

the seller’s professionalism and reliability above and beyond the information contained in the seller

score. If the style of a listing helps identify professional electronics retailers, for example, buyers

may have more faith that the Kindle is genuine retail stock that has not been opened or used in

any way. Another possibility for why the style of the listing may be important is psychological in

nature. Much as in the case of affective advertising, providing a stylized listing could make the

reader more engaged with the product or attach positive emotions to the listing. Either outcome

could plausibly alter a buyer’s willingness to pay.

There are a number of features of the eBay platform that make it relatively low in cost for a

frequent seller to use a long, stylized listing that is difficult for other sellers to copy, and these

features in turn make the style of a listing a credible signal of the seller’s reliability. First, sellers

that list a large number of goods often use the same listing template to convey standardized

information such as a warranty and links to other items the seller might have for sale at that

time. Since these listing templates need only be created once and can be used repeatedly, using

such a template for a Kindle Fire is low in cost for a frequent seller.

11

Second, it is not easy for

a technically unsophisticated user to replicate images, tags, and other formatting features from a

professional user’s listing. For example, copying the text style would require parsing the source

code of the listing’s web page, and then entering the copied HTML code into his or her own listing

using eBay’s HTML editor. This task is further complicated by the numerous features of a listing’s

webpage that are generated by eBay automatically—a typical stylized listing contains thousands of

lines of HTML code.

12

Given that any eBay seller with the technical expertise to parse the HTML

code presumably places a high value on their time, the struggle of replicating an elaborate listing

is probably not worth the time even if the sale price can be increased by a standard deviation (i.e.,

$30.23). Third, if an experienced seller includes links to other items or to an external website, these

features cannot be credibly copied by other sellers, further depressing the incentive to copy such a

listing. In summary, there is a variety of reasons to expect that the contents of a listing can serve

as a credible signal of either product or seller characteristics.

Taking the seller score as a measure of the seller’s experience on eBay, we can illustrate our

hypothesis that variables describing the style of the listing are associated with experience and

professionalism by comparing the distribution of these variables for sellers with a score below the

median against those sellers that have an above median score. Table 4 includes all of the style

variables as well as the variables describing the number of words used in each of these sections,

and the mean of each variable is displayed with the standard error of the mean noted underneath.

11

Listing templates are discussed on the eBay website at https://pages.ebay.com/help/sell/creating-products-

templates.html. The reader should note, however, that creating a detailed listing template is as costly as producing

a single complete listing, so it is of little use to low-volume sellers.

12

The listings in our data contained 210,000 characters and 12,000 words/tags on average, including standardized

content by eBay and the personalized content by individual sellers. Although comments are present in the code, they

are relatively sparse and we do not believe that it would help a neophyte decipher the webpage.

20 AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

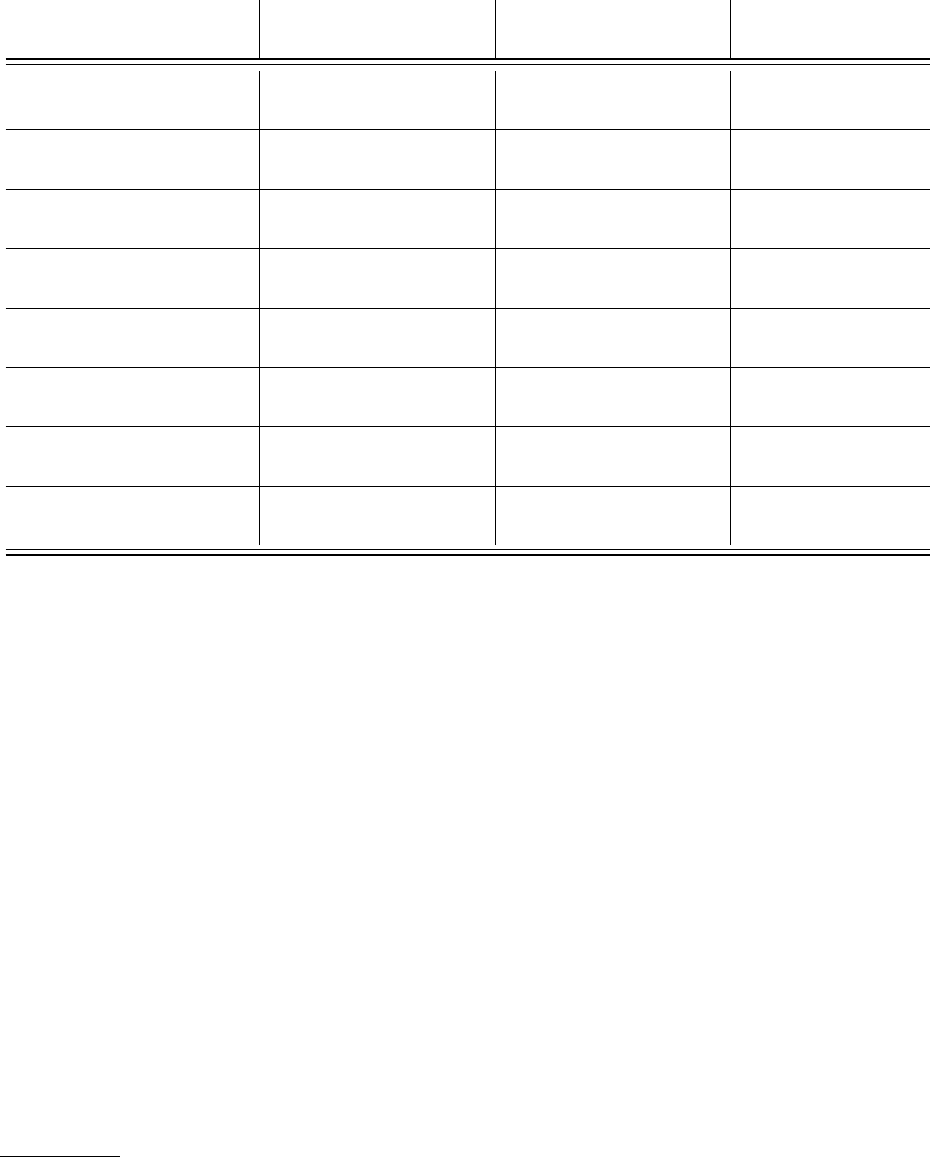

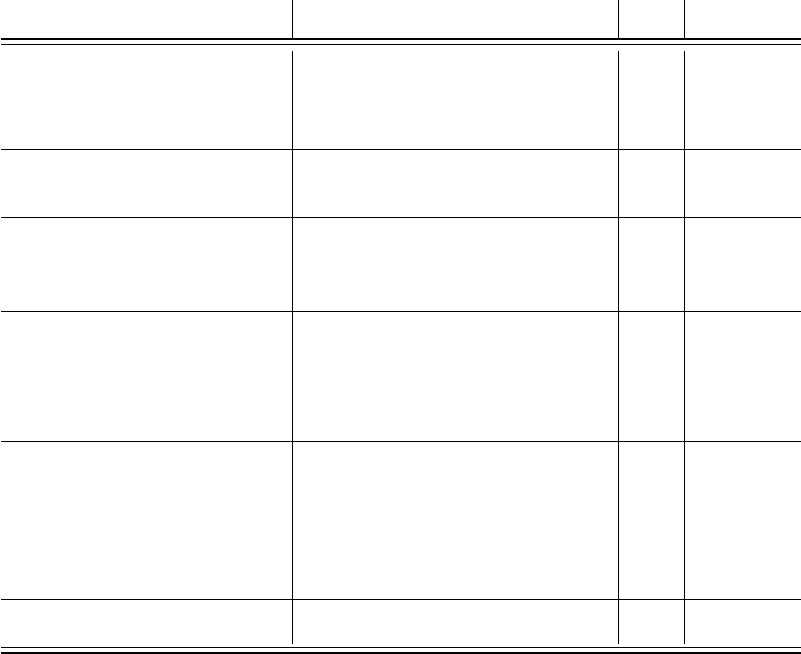

Variable

Mean for Low

Seller Score Agents

Mean for High

Seller Score Agents

P-Value of T-Test

Number of

Words in Title

9.96

(0.11)

11.19

(0.09)

9.91 ∗ 10

−17

Number of Special

Characters in Title

14.25

(0.19)

15.68

(0.17)

2.19 ∗ 10

−8

% Uppercase

Characters in Title

0.28

(0.006)

0.34

(0.007)

1.34 ∗ 10

−10

Number of Words

in Description

68.22

(6.28)

192

(13.8)

6.41 ∗ 10

−16

% Uppercase Characters

in Description

0.10

(0.007)

0.15

(0.008)

1.47 ∗ 10

−6

Number of HTML

Tags in Description

52.32

(5.58)

152.86

(9.26)

6.70 ∗ 10

−20

Number of

Font Sizes

0.30

(0.035)

0.85

(0.071)

4.63 ∗ 10

−12

Number of Font

Size Changes

2.14

(0.49)

6.51

(0.734)

8.78 ∗ 10

−7

Table 4. Style Variables, Split by Seller Score

P -values for a t-test for equality of the means is included as well. All of the differences in the means

of the variables are highly significant, and Table 4 reveals that experienced sellers use listings that

are both longer (e.g., with more words in the title) and more stylized (e.g., three times as many

HTML tags are used in the description).

Our discussion of the credibility of the signaling suggests an interesting new dimension of platform

design. One might have conjectured that improving the user interface so that it is easier to create

elaborate listings might have increased the value of the platform to users, which would in turn result

in higher profits for eBay. Our argument suggests that the opposite may occur—if nonprofessional

sellers can more easily create listings with a professional appearance, then the signaling value

of an elaborate listing template may be reduced. Without these signals of professionalism and

reliability, the large sellers might find that the platform generates less value, and this might drive

professional users away from the platform. Since eBay viewed these professional sellers as crucial

for the platform’s success at the time our data was collected, this suggests that allowing these users

to signal their professionalism is an important element of eBay’s success.

13

Therefore, imposing

some difficulty on new users trying to create a professional listing may actually be a clever platform

design choice.

14

13

To re-enforce this point, at the “eBay Seller Summit” in 2015 (after our sample period), Devin Wenig, the CEO of

eBay at the time, specifically mentioned a shift in focus towards small and medium sized sellers.

14

There are other ways a professional seller could convey their professionalism (e.g., acquring the eBay “Powerseller”

badge). However, it is unclear how effective these signals would be given buyers can easily observe the seller’s score.

PREDICTING PRICE DISPERSION 21

5. Analysis of Price Variation

5.1. Overview and Empirical Strategy. We now begin our main empirical analysis which aims

to shed light on the degree to which price variation may be due to subtle heterogeneity across

product listings, rather than being driven endogenously by search frictions. To fix ideas, for the

i

th

listing let X

i

denote a row vector containing the basic variables used in traditional studies of

online price dispersion, as outlined in Table 1. Let Z

i

denote a row vector containing the additional

variables in our full dataset for that listing, including variables in Table 2 and the first 25 principal

components of the BoW data, [P C

1,i

, . . . , P C

25,i

]. Finally, let y

i

denote the listing’s price. When

assessing our basic dataset, consider estimating a basic pricing model of the form

(1) y

i

= f(X

i

) + e

i

,

or an augmented model of the form

(2) y

i

= ϕ(X

i

, Z

i

) + ε

i

.

Economic theory indicates several plausible interpretations for the noise terms, e

i

and ε

i

, each

arising directly or indirectly from market frictions. First, if consumers have heterogeneous costs

for time spent searching on eBay, then this can generate asymmetrically informed consumers in

equilibrium, which several models show can endogenously create price dispersion (see Salop and

Stiglitz 1977 [45], Rosenthal 1980 [43], Wilde and Schwartz 1979 [53], Varian 1980 [51], Baye and

Morgan 2001 [8], and Baye et al. 2006 [11]). In this case the error term would be a random

function of the distribution of buyers each seller expects to encounter and not of the listing’s

attributes. Second, if seller reservation values are heterogeneous then price dispersion can arise in

the presence of search frictions (see MacMinn 1980 [35], Reinganum 1979 [41]). In this case the

error term would be a function of each seller’s random supply cost. Third, even if sellers and buyers

are ex-ante identical, other models of search frictions have been known to generate a mixed-strategy

equilibrium for price quotes offered by sellers of identical products (Burdett and Judd 1983 [17]).

The common thread in these scenarios is that each distinct theory indicates an interpretation of

pricing noise—random mixing or idiosyncratic supply costs—that is plausibly independent of the

vector of observable characteristics [X, Z]. In that sense, one may think of f and ϕ as accounting

for the role of observed listing heterogeneity, and the error terms e and ε as accounting for noise

that is generated (either directly or indirectly) by search frictions.

To answer our research question on the relative importance of heterogeneity and frictions we

need not identify a causal model, so our aim is not to achieve individual parameter estimates to

which causal demand interpretations may be attached. Rather, we attempt to determine what

combination of more ample observables and more flexible statistical models can exhaust predictive

power for cross-sectional price differences. To the extent that one may assume equilibrium price

variation due to market frictions is orthogonal to the observables, as we have argued above with

an appeal to economic theory, then the price variation we cannot explain is an upper bound on

the price variation generated by market frictions. To the extent that we have not exhausted the

22 AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

predictive power of the observables, we have only placed a weak upper bound on the effect of market

frictions.

There are two possible reasons why an attempt at estimation would falsely attribute too little

explanatory power to the model and too big of a role to the error term: omitted variables and

functional form mis-specification. If the regression functions took a linear-in-parameters form, say

f(X

i

) = X

i

β and ϕ(X

i

, Z

i

) = X

i

β + Z

i

γ for some suitably conformable parameter vectors, β and

γ, then the error terms would be related through the identity e

i

= Z

i

γ + ε

i

. The existence of an

omitted variables problem would therefore hinge on whether Z

i

had a meaningful impact on prices,

which in the linear model is the same as γ = 0. On the other hand, it could also be that linear

models like X

i

β + Z

i

γ are too restrictive to identify complex interactions between the observables

in nudging price up or down. In that case, a non-separable functional form for f or ϕ might be

required to achieve full explanatory power.

As discussed in Section 3, the order in which listings are served following a buyer’s search depends

primarily on whether a listing’s title included all of the words in the buyer’s query. This would

suggest that the content of the title may not be orthogonal to the market frictions since the order

in which items are served to the buyer could affect the difficulty of the search problem facing the

buyer. We discuss this issue further in Appendix A.2, and we perform a robustness check that

suggests that the predictability of the price is not driven by the sellers’ attempts to alleviate search

frictions and make their listings more visible.

5.2. Measuring Predictive Power. One might naturally expect to explain more price variation

than the prior literature given the rich set of regressors in our data and the use of machine learning

techniques. The more interesting question is how much of it can be explained, and whether the

additional explanatory power is due to the richer set of regressors, the machine learning techniques,

or the combination of the two. To assess the importance of the richer dataset, we compare the

predictive power of a linear model estimated on the full dataset to the predictive power of the same

kind of model estimated on the basic dataset. To evaluate the importance of the machine learning

algorithms, we compare the predictive power of a random forest estimated on the full dataset with

the predictive power of a linear model estimated on the same dataset. For completeness, we also

estimate a random forest model on the basic dataset. We use what we call the out-of-sample R

2

statistic as our measure of the fraction of the price variation we have explained.

Given our large set of regressors, both OLS and the random forest techniques we employ are

prone to overfitting. To obtain a meaningful measure of the predictive power of our models, we

compute an out-of-sample version of the R

2

statistic through 10-fold cross validation.

15

The 10-fold

cross validation procedure starts by randomly partitioning our data into 10 equally sized subsets

that we denote {F

1

, ..., F

10

}. For each k = 1, ..., 10 we hold out F

k

as a validation set and estimate

our model on the union of the remaining nine subsets of data. We then compute the sum of

squared errors, SSE

k

, and total sum of squares, T SS

k

, in the validation set F

k

using the estimated

15

We also tried using a 5-fold cross-validation, but found that the R

out

2

that resulted was within 0.01 of that found

using a 10-fold procedure.

PREDICTING PRICE DISPERSION 23

model. For a fixed partition of the data, the out-of-sample R

2

statistic is:

16

(3) R

2

out

= 1 −

P

10

k=1

SSE

k

P

10

k=1

T SS

k

= 1 −

P

10

k=1

P

y

i

∈F

k

(y

i

− by

i

)

2

P

10

k=1

P

y

i

∈F

k

(y

i

− y

i

)

2

where y

i

is the price of the i

th

listing in our data, by

i

is a predicted price for that listing, and y

i

is

the average price in the validation set.

17

We then repeat the cross-validation process 100 times

and average the resulting R

2

out

to compute the statistics we report.

The in-sample R

2

, the statistic usually reported by economists, is computed by estimating the

model on the full dataset, forming a prediction for the price of each listing in the same dataset,

and computing the R

2

based on these in-sample predictions. We report this traditional measure

for two reasons. First, comparing the in- and out-of-sample metrics for the OLS model reveals the

number of regressors is large relative to the sample size. Second, the relative magnitudes of the in-

and out-of-sample metrics for the random forest model illustrates the problem of overfitting when

the random forest both selects and estimates a flexible model on the same data.

5.3. Ordinary Least Squares. In order to assess the usefulness of standard econometric tech-

niques, we measure how much of the price variation can be explained using OLS. We interpret

the OLS regression as the best linear predictor and do not assume a causal interpretation of our

results. First we regress price against the regressors in the basic dataset as a benchmark. In line

with prior research, we are able to explain 12.5% of the price variation. Next, we apply OLS to

our full dataset, which yields an R

2

out

of 0.190. Table 5 summarizes our results and includes both

in- and out-of sample R

2

. As asymptotic theory would suggest for a model where the number of

regressors is small relative to the sample size, the in- and out-of-sample R

2

are close for the OLS

model estimated on the basic dataset since we can estimate the small number of regression coeffi-

cients very precisely. The additional information in the full dataset, with its vast increase in the

number of regressors, did provide a better fit, but the improvement was small. Moreover, the many

extra regressors cause the linear model to overfit the data, as evidenced by the large gap between

in-sample and out-of-sample R

2

for OLS estimated on the full dataset. The regression coefficients

for the basic dataset are listed in Table 6, and the coefficients all possess the expected sign.

5.4. Random Forest. A random forest is an ensemble estimator; in other words, it is the average

of a large collection of underlying regression tree models (Breiman 2001 [15]). Before describing

how an ensemble of regression trees is constructed, let us describe the algorithm for creating a

single regression tree. A regression tree partitions the space of possible regressor values and assigns

each element of the partition a prediction value equal to the average of the outcome variables in

16

We also computed the average out-of-sample R

2

:

R

2

out

= 1 −

1

10

10

X

k=1

SSE

k

T SS

k

The resulting values differed from R

2

out

by less than 0.5%.

17

Breiman (2001 [15]) proposed using out-of-bag measures to assess goodness of fit. We prefer our R

2

out

metric since

it can be computed for the OLS model as well.

24 AARON BODOH-CREED, J

¨

ORN BOEHNKE, AND BRENT HICKMAN

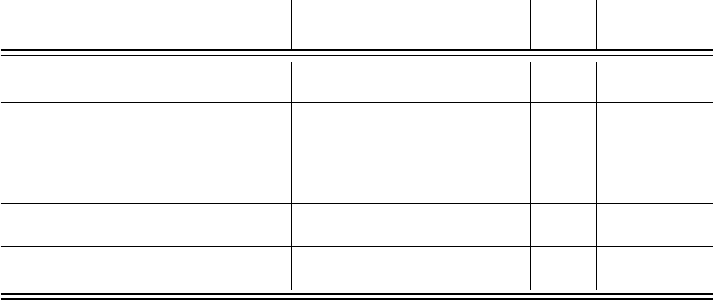

R

2

Version

Data Set

Out-of-Sample In-Sample

Basic 0.1297 0.1446

Full 0.1898 0.2917

Table 5. OLS Predictive Power

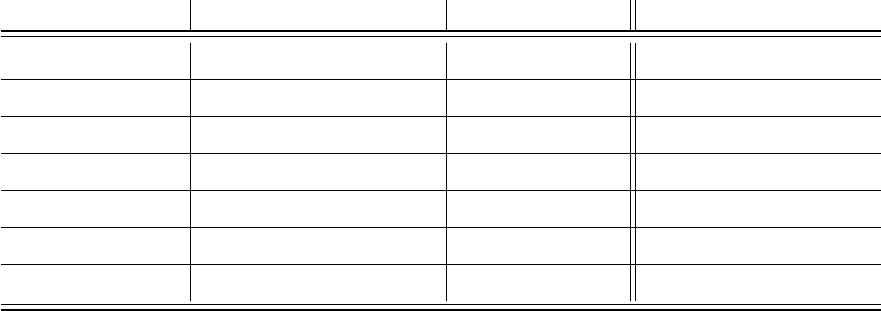

Parameter Point Est Std. Err. P-Value 95% Confidence Interval

Shipping Price −1.189

∗∗∗

0.212 < .001 [-1.604, -0.764]

Shipping Calculated −12.02

∗∗∗

1.939 < .001 [-15.82,-8.21]

Returns Allowed 8.229

∗∗∗